Batch Prediction

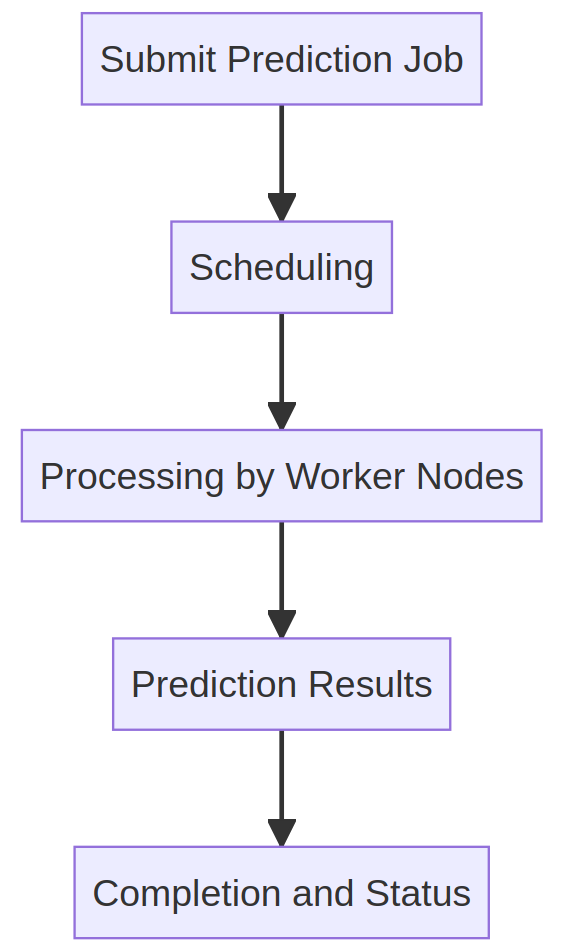

The Batch Prediction module enables users to run large-scale predictions across datasets in a structured and efficient manner. It leverages parallel processing through worker nodes and provides real-time tracking of prediction status.

Overview

You can access this module under:

Home > Playground > Batch Prediction

Key Steps:

1. Submit a Prediction Job

- Select the Batch Prediction tab from the left-side menu in the Playground interface.

- Click the + New Batch Prediction button.

- Fill out the required fields:

- Select Model: Choose a deployed model.

- Select Dataset: Choose a dataset already registered on the platform.

- Select Series: Pick specific series within the dataset (if needed).

- Prediction Settings: Customize model-specific options (e.g., slice ranges, label types).

- Click Submit to start the batch prediction job.

2. Monitor Progress

- After submission, the job will appear in the Batch Prediction Table.

- The following columns are available for each job:

- ID: Job identifier.

- Model / Dataset: References to the model and dataset used.

- Started by: The user who launched the job.

- Progress: Displays current progress as a percentage.

- Start / End Time: Timestamps for job lifecycle.

- Status: Indicates current job status (e.g.,

Queued,In Progress,Completed,Failed).

- Progress bar updates in real-time as worker nodes complete predictions.

3. Result Access

- Once completed, click the corresponding View Results icon to:

- Inspect per-series predictions in the annotation viewer.

- Navigate to generated annotations and visual outputs.

- Launch validations if desired on the predicted results.

4. Additional Notes

- You can run multiple batch predictions simultaneously.

- Jobs are scheduled and distributed automatically based on platform resources.

- Failed jobs will display a red status and can be retried.

- Detailed logs and job metadata are available per batch for auditing and debugging purposes.

This module is ideal for evaluating model performance at scale, preparing annotations for validation, or generating predictions across longitudinal patient studies.